I’m putting this list out there with all humility. I’m not an expert; I’m a learner, just like many of you. These are lessons I’ve picked up while building my own GPTs in ChatGPT, informed by trial and error, conversations with peers, and a lot of debugging at 2 a.m.

I graduated with a computer science degree. My first job after college was as an entry-level software developer. And I think many of us who came up as software developers make the same mistake: we try to program and iterate on our GPTs the same way we wrote code.

For example, an IF-THEN-ELSE statement is absolutely black and white. You’re going to get consistent output. A try-catch block? Same deal.

But when you’re creating a GPT, you don’t always get consistent output. There’s a huge element of variability in there. So how can we stop relying solely on our strengths as developers and start using other strengths from across our fields (like teaching, debugging, coaching, or design thinking) to make better GPTs? Here’s my list.

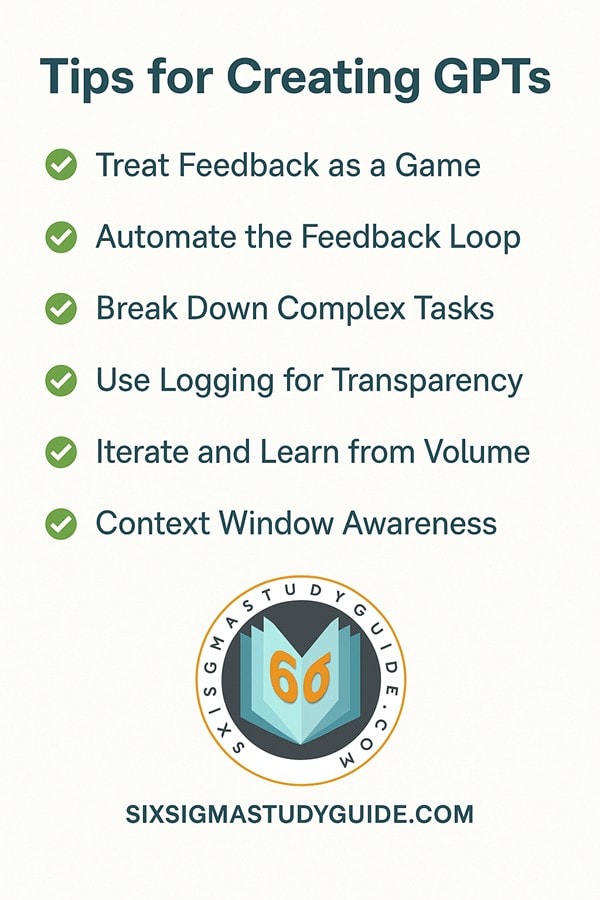

1. Treat Feedback as a Game

When I was just starting out as a developer, I had a tech lead who treated every single line of my code like it was wrong. I’m not exaggerating. But instead of getting discouraged, I turned it into a game: every time he called out a mistake, I wrote down the “rule.” Pretty soon I had a list of 100+ mini-rules (my own personal playbook).

I use the same mindset when building GPTs.

This approach mirrors the Lean philosophy of Kaizen, which emphasizes small, incremental changes for continuous improvement.

Each error or weird output? That’s feedback. That’s a rule I didn’t clarify. That’s something I can fix. If you treat it like a game, you’ll not only survive the process, you might actually enjoy it!

2. Automate the Feedback Loop

Eventually, I started thinking: can I automate that feedback process?

That’s what I aim for with GPT creation. I try to build in referential loops, structures that help the GPT check its own work. For example, I’ll ask it to explain its reasoning step-by-step before it gives a final answer, or to compare multiple drafts before selecting one.
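Here is a minimal sketch of the "compare multiple drafts" idea. It assumes a hypothetical `model` callable that takes a prompt string and returns a reply string; that is a stand-in for whatever you actually use, not a real API. `fake_model` exists only so the example runs offline.

```python
from typing import Callable, List


def pick_best_draft(model: Callable[[str], str], task: str, n_drafts: int = 3) -> str:
    """Ask for several drafts, then ask the model to choose the best one."""
    drafts: List[str] = [
        model(f"Draft {i + 1}. Think step by step, then answer.\nTask: {task}")
        for i in range(n_drafts)
    ]
    numbered = "\n\n".join(f"[{i + 1}] {d}" for i, d in enumerate(drafts))
    verdict = model(
        "Compare these drafts for the task below and reply with only "
        f"the number of the best one.\nTask: {task}\n\n{numbered}"
    )
    # Pull the digits out of the verdict; fall back to the first draft
    # if the reply is not a usable number.
    digits = "".join(ch for ch in verdict if ch.isdigit())
    index = int(digits) - 1 if digits else 0
    if not 0 <= index < len(drafts):
        index = 0
    return drafts[index]


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call (assumption)."""
    if prompt.startswith("Compare"):
        return "2"
    return "draft text for " + prompt.splitlines()[0]


best = pick_best_draft(fake_model, "Summarize the ticket")
```

The fallback to the first draft is a design choice: a judging step that returns something unexpected should not crash the whole flow.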

Just like in the Plan-Do-Check-Act loop, this cycle depends on iterative feedback. There’s also an element of Poka-Yoke, or error-proofing, here. If you can feed an error back to the GPT automatically when it occurs, you can prevent further mistakes.
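One way to picture that error-proofing: validate the reply, and if it fails, send the error message back with the original prompt. This is a sketch under assumptions. The `model` callable, the JSON format, and the key names are all hypothetical, and `make_flaky_model` only simulates a model that gets it wrong once.

```python
import json
from typing import Callable, Dict, List, Tuple


def ask_with_validation(
    model: Callable[[str], str],
    prompt: str,
    required_keys: List[str],
    max_attempts: int = 3,
) -> Dict:
    """Retry until the reply is a JSON object containing the required keys."""
    current_prompt = prompt
    for _ in range(max_attempts):
        reply = model(current_prompt)
        try:
            data = json.loads(reply)  # JSONDecodeError is a ValueError
            if not isinstance(data, dict):
                raise ValueError("reply is not a JSON object")
            missing = [k for k in required_keys if k not in data]
            if missing:
                raise ValueError(f"missing keys: {missing}")
            return data
        except ValueError as err:
            # Feed the specific error back so the next attempt can fix it.
            current_prompt = (
                f"{prompt}\n\nYour previous reply was rejected: {err}. "
                "Reply with valid JSON only."
            )
    raise RuntimeError(f"no valid reply after {max_attempts} attempts")


def make_flaky_model() -> Tuple[Callable[[str], str], List[str]]:
    """Hypothetical model that fails once, then answers correctly."""
    calls: List[str] = []

    def model(prompt: str) -> str:
        calls.append(prompt)
        if len(calls) == 1:
            return "oops"
        return '{"category": "bug", "priority": 2}'

    return model, calls


flaky, seen_prompts = make_flaky_model()
result = ask_with_validation(flaky, "Classify this ticket.", ["category", "priority"])
```

Capping the attempts matters: without a limit, a prompt the model can never satisfy would loop forever.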

3. Break Down Complex Tasks

Teaching a GPT is a lot like parenting.

When I tell my kid, “Go upstairs, put away your clothes, and take a shower,” he hears the first part, gets distracted by a comic book, and forgets the rest. That’s not his fault; it’s too many instructions at once.

Same thing with GPTs. If you give it a massive prompt with 12 steps and expect brilliance, you’re setting yourself up for disappointment.

Instead, break it down.

- Step 1: Sort the user input.

- Step 2: Apply the rule set.

- Step 3: Format the result.

See if you can daisy-chain GPTs together.

Simple, sequential, understandable.
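The three steps above can be sketched as a simple chain, where each step’s output becomes the next step’s input. The `model` callable is a hypothetical stand-in, and `echo_model` just wraps its input so you can see the order of the calls.

```python
from typing import Callable, List

STEPS: List[str] = [
    "Sort the user input.",
    "Apply the rule set.",
    "Format the result.",
]


def run_chain(model: Callable[[str], str], steps: List[str], user_input: str) -> str:
    """Run one small instruction at a time, passing the output forward."""
    text = user_input
    for instruction in steps:
        text = model(f"{instruction}\n\nInput:\n{text}")
    return text


def echo_model(prompt: str) -> str:
    """Hypothetical stand-in: wraps the input in the instruction's first word."""
    instruction, _, payload = prompt.partition("\n\nInput:\n")
    return f"{instruction.split()[0].lower()}({payload})"


chained = run_chain(echo_model, STEPS, "raw")
```

Because each step is a separate call, you can inspect or test the intermediate output of any single step without rerunning the rest.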

4. Use Logging for Transparency

Back when I was struggling with unfamiliar codebases, I’d throw in print statements just to see what was going on. “Am I even hitting this line? Did this function run?” It felt clunky, but it worked.

Using logging and telemetry fits naturally into several Six Sigma principles, especially those that emphasize data-driven decisions, real-time monitoring, and process control.

Now I ask my GPTs to do the same.

I build in prompts like, “Tell me what you’re thinking at each step,” or “Explain why you made this choice.” It’s a kind of logging. I don’t just want the answer; I want to understand the path it took to get there.
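A rough sketch of how this could look in practice: append a logging instruction to the prompt, then separate the trace from the answer. The `LOG:` and `ANSWER:` prefixes are a convention I made up for this example, and `model` is a hypothetical callable.

```python
from typing import Callable, List, Tuple

# Assumed convention: the model marks reasoning lines and the final answer.
LOGGING_SUFFIX = (
    "\n\nBefore answering, write each reasoning step on its own line "
    "starting with 'LOG:'. Then give the final answer on a line "
    "starting with 'ANSWER:'."
)


def split_trace(reply: str) -> Tuple[List[str], str]:
    """Separate 'LOG:' lines from the 'ANSWER:' line in a reply."""
    logs: List[str] = []
    answer = ""
    for line in reply.splitlines():
        stripped = line.strip()
        if stripped.startswith("LOG:"):
            logs.append(stripped[len("LOG:"):].strip())
        elif stripped.startswith("ANSWER:"):
            answer = stripped[len("ANSWER:"):].strip()
    return logs, answer


def ask_with_trace(model: Callable[[str], str], prompt: str) -> Tuple[List[str], str]:
    """Send the prompt with the logging instruction and parse the reply."""
    return split_trace(model(prompt + LOGGING_SUFFIX))


sample_reply = "LOG: read input\nLOG: applied rule 3\nANSWER: approved"
trace, final_answer = split_trace(sample_reply)
```

Keeping the trace separate from the answer means you can save the log for debugging while showing the user only the final line.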

That transparency helps me debug, refine, and trust the model over time.

5. Iterate and Learn from Volume

Let’s be honest: your first few GPTs might be junk. Mine were. That’s fine.

What helped me was pushing out volume. I’d run lots of examples, test every edge case I could think of, and watch what broke. Then I’d tweak the prompt, adjust the system message, or restructure the flow.
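A small harness makes that kind of volume testing repeatable. This is a sketch, not a framework: each case pairs a prompt with a check function, and `upper_model` is a hypothetical stand-in for a real model call.

```python
from typing import Callable, List, Tuple

Case = Tuple[str, Callable[[str], bool]]


def run_cases(model: Callable[[str], str], cases: List[Case]) -> List[Tuple[str, str]]:
    """Run every case and collect (prompt, reply) pairs that fail their check."""
    failures: List[Tuple[str, str]] = []
    for prompt, check in cases:
        reply = model(prompt)
        if not check(reply):
            failures.append((prompt, reply))
    return failures


def upper_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    return prompt.upper()


CASES: List[Case] = [
    ("hello", lambda r: r == "HELLO"),
    ("", lambda r: len(r) > 0),  # edge case: empty input
    ("abc", lambda r: r.isupper()),
]

failed = run_cases(upper_model, CASES)
```

After each prompt tweak, rerun the same cases. The list of failures tells you what broke, and a case that used to pass and now fails tells you what the tweak cost.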

Over time, I started seeing patterns. Even when I didn’t fully understand why something worked, I could still refine it based on results.

You don’t need to be a genius to make progress; you just need volume and persistence.

Just like the Control Phase in DMAIC, the goal is to sustain improvements. Here, I want to reduce how often I have to intervene in the GPT manually. If you can embed smart checks and balances into your GPTs, like you would Control charts and KPIs in Six Sigma, you’re removing the human bottleneck and letting the system level itself up faster.
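To make the control-chart comparison concrete, here is a sketch that applies standard p-chart limits (center line plus or minus three standard deviations) to failure counts from repeated test batches. Treating each batch of GPT test runs as a sample is my own framing, and the numbers are made up for illustration.

```python
import math
from typing import List, Tuple


def p_chart_limits(failures_per_batch: List[int], batch_size: int) -> Tuple[float, float, float]:
    """Return (lower limit, center line, upper limit) for the failure rate."""
    if not failures_per_batch or batch_size <= 0:
        raise ValueError("need at least one batch and a positive batch size")
    p_bar = sum(failures_per_batch) / (len(failures_per_batch) * batch_size)
    sigma = math.sqrt(p_bar * (1 - p_bar) / batch_size)
    # A proportion cannot leave the range [0, 1], so clamp the limits.
    return max(0.0, p_bar - 3 * sigma), p_bar, min(1.0, p_bar + 3 * sigma)


def out_of_control(failures_per_batch: List[int], batch_size: int) -> List[int]:
    """Return the indexes of batches whose failure rate falls outside the limits."""
    lcl, _, ucl = p_chart_limits(failures_per_batch, batch_size)
    return [
        i
        for i, failures in enumerate(failures_per_batch)
        if not lcl <= failures / batch_size <= ucl
    ]


# Made-up data: failures out of 50 test prompts per batch.
FAILURES = [2, 3, 2, 3, 2, 3, 2, 3, 2, 18]
flagged = out_of_control(FAILURES, 50)
```

A flagged batch is a signal to go look at what changed (a prompt edit, a new kind of input), rather than a verdict on its own.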

6. Mind the Context Window

I’ve noticed something with both my kids and my GPTs: if you give them too much input at once, they get confused.

That’s because models (and humans!) have limited context windows. Flooding them with too much data or too many goals leads to overload and failure.

So I’ve started designing multi-step flows with multiple agents. Each one handles a smaller, digestible chunk of the task. Instead of asking one GPT to summarize, analyze, revise, and respond, I’ll hand off those responsibilities to different “roles” or use chained prompts.
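One simple way to keep each hand-off digestible is to split long input into chunks under a size budget. This sketch counts words as a rough proxy for tokens, which is an assumption; real token counts depend on the model’s tokenizer.

```python
from typing import List


def chunk_by_budget(text: str, max_words: int) -> List[str]:
    """Group paragraphs into chunks of at most max_words words each.

    A single paragraph longer than the budget is kept whole in its own
    chunk, so that chunk can exceed the budget.
    """
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    chunks: List[str] = []
    current: List[str] = []
    count = 0
    for paragraph in text.split("\n\n"):
        words = len(paragraph.split())
        if words == 0:
            continue  # skip empty paragraphs
        if current and count + words > max_words:
            chunks.append("\n\n".join(current))
            current, count = [], 0
        current.append(paragraph)
        count += words
    if current:
        chunks.append("\n\n".join(current))
    return chunks


pieces = chunk_by_budget("a b c\n\nd e\n\nf g h i", max_words=5)
```

Splitting on paragraph boundaries rather than at a fixed word count keeps related sentences together, which tends to matter more than hitting the budget exactly.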

Keep your GPTs light and focused, and they’ll perform better.

I like to combine this principle with the logging practice described earlier. Understanding how GPT behavior shifts with longer prompts is part of hearing the “voice” of the model. In Six Sigma, the Voice of the Process helps you monitor how your process is performing in real time. That gives you your best chance of success.

7. Everyone Starts Somewhere

When I first started coding, I was embarrassed by how basic my tools were. I felt like an imposter using console.log or println() to debug. But the truth is, everyone starts there. Everyone who didn’t give up went through that same awkward phase.

It’s the same with GPTs.

If your first attempts feel clunky, if you’re embarrassed by your system prompts, if you’re copy-pasting from other GPTs just to see how they work, that’s fine. That’s normal. That’s how learning happens.

You only get better by doing.

Final Thoughts

I’m not sharing these tips because I’ve mastered the craft; I’m still in the arena. But if any of these ideas give you a shortcut, a bit of clarity, or the confidence to keep going, then it’s worth it.

GPTs are the most powerful tools I’ve ever worked with. But they’re also tricky. The more we learn out loud, the faster we all improve.

If you’re building GPTs, I’d love to hear what’s worked for you (or what hasn’t).

Let’s build smarter.
